What is AI bias?

AI bias is an anomaly in the output of machine learning algorithms. It can stem from prejudiced assumptions made during the algorithm development process or from prejudices in the training data.

Types of AI bias

- Cognitive biases: These are affective feelings towards a person or a group based on their perceived group membership. Psychologists have defined and classified more than 180 human biases, and each can affect the decisions we make as individuals. These biases could seep into machine learning algorithms via either:

  - Designers unknowingly introducing them to the model.

  - A training data set which includes those biases.

- Lack of complete data: Incomplete data may not be representative of the population it is meant to describe, and can therefore include bias. For example, most psychology research studies include results from undergraduate students, who are a specific group and do not represent the whole population.
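One simple way to spot the representativeness problem described above is to compare each group's share of the data with its share of the population the model is meant to serve. The sketch below is a minimal illustration, assuming you already know (or can estimate) the population shares; the helper name `representation_gaps`, the group labels, the counts, and the 5-point tolerance are all hypothetical.

```python
def representation_gaps(sample_counts, population_shares):
    """For each group: its share of the sample minus its share of the population.

    Positive values mean over-representation, negative values under-representation.
    """
    total = sum(sample_counts.values())
    return {
        group: sample_counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical figures: a study sample drawn mostly from undergraduates,
# compared against an assumed population breakdown.
sample_counts = {"undergraduates": 800, "other_adults": 200}
population_shares = {"undergraduates": 0.10, "other_adults": 0.90}

gaps = representation_gaps(sample_counts, population_shares)
for group, gap in gaps.items():
    # The 0.05 tolerance is an arbitrary illustrative choice, not a standard.
    flag = "  <- check" if abs(gap) > 0.05 else ""
    print(f"{group}: {gap:+.2f}{flag}")
```

A check like this only detects imbalance on the groups you thought to list; it says nothing about groups or attributes missing from the data altogether.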

Examples of AI bias

- Amazon’s biased recruiting tool

Aiming to automate the recruiting process, Amazon started an AI project in 2014. The project focused solely on reviewing job applicants’ resumes and rating applicants with AI-powered algorithms, so that recruiters would not have to spend time on manual resume screening. However, by 2015, Amazon realized that the new AI recruiting system was not rating candidates fairly and showed bias against women.

Amazon had used historical data from the previous 10 years to train its AI model. This historical data contained biases against women, since men dominated the tech industry and made up 60% of Amazon’s employees. As a result, Amazon’s recruiting system incorrectly learned that male candidates were preferable. It penalized resumes that included the word “women’s”, as in “women’s chess club captain.” Amazon eventually stopped using the algorithm for recruiting purposes.

- Racial bias in healthcare risk algorithm

A health care risk-prediction algorithm used on more than 200 million U.S. citizens demonstrated racial bias because it relied on a faulty metric for determining medical need.

The algorithm was designed to predict which patients would likely need extra medical care; however, it was later revealed that the algorithm was producing faulty results that favored white patients over black patients.

The algorithm’s designers used previous patients’ healthcare spending as a proxy for medical needs. This was a poor interpretation of historical data: income and race are highly correlated, and making assumptions based on only one of these correlated variables led the algorithm to produce inaccurate results.

- Bias in Facebook ads

There are numerous examples of human bias, and we see it happening on tech platforms. Since data from tech platforms is later used to train machine learning models, these biases lead to biased machine learning models.

In 2019, Facebook was allowing its advertisers to intentionally target adverts according to gender, race, and religion. For instance, women were prioritized in job adverts for roles in nursing or secretarial work, whereas job ads for janitors and taxi drivers were mostly shown to men, in particular men from minority backgrounds.

As a result, Facebook will no longer allow employers to specify age, gender or race targeting in its ads.

Will AI ever be completely unbiased?

Technically, yes. An AI system can only be as good as the quality of its input data. If you can clean your training dataset of conscious and unconscious assumptions about race, gender, or other ideological concepts, you can build an AI system that makes unbiased data-driven decisions.

However, in the real world, we don’t expect AI to be completely unbiased any time soon, for the same reason given above: AI can only be as good as its data, and people are the ones who create data. There are numerous human biases, and the ongoing identification of new biases keeps increasing the total number. Therefore, just as a completely unbiased human mind may not be possible, neither may a completely unbiased AI system. After all, humans create the biased data, and humans and human-made algorithms are the ones checking that data to identify and remove biases.

What we can do about AI bias is minimize it by testing data and algorithms and applying other best practices.

How to fix biases in machine learning algorithms

First, if your data set is complete, you should acknowledge that AI biases can only arise from human prejudices, and focus on removing those prejudices from the data set. However, this is not as easy as it sounds.

A naïve approach is to remove protected classes (such as sex or race) from the data, deleting the labels that make the algorithm biased. However, this approach may not work: removing those labels may affect the model’s understanding of the data, and the accuracy of your results may get worse.
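A related reason that deleting a protected column can fall short is that other features may still encode it indirectly. The sketch below is a minimal illustration under hypothetical assumptions: a toy data set where `group` is the removed protected attribute and `zip_code` is a remaining feature that happens to correlate with it. It measures how accurately the protected attribute can be guessed from the remaining feature alone, compared with a baseline that ignores all features; the function name and data are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: "group" is the protected attribute that was dropped from
# the model's inputs; "zip_code" is a feature the model still sees.
records = (
    [{"zip_code": "A", "group": "g1"}] * 4
    + [{"zip_code": "A", "group": "g2"}] * 1
    + [{"zip_code": "B", "group": "g2"}] * 4
    + [{"zip_code": "B", "group": "g1"}] * 1
)


def proxy_accuracy(rows, proxy, protected):
    """Accuracy of guessing `protected` from `proxy` alone, by predicting the
    most common protected value observed for each proxy value."""
    by_value = defaultdict(Counter)
    for row in rows:
        by_value[row[proxy]][row[protected]] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return correct / len(rows)


# Baseline: always guess the overall most common group, ignoring every feature.
baseline = max(Counter(row["group"] for row in records).values()) / len(records)
leak = proxy_accuracy(records, "zip_code", "group")
print(f"baseline: {baseline:.2f}, from zip_code alone: {leak:.2f}")
```

If the proxy score is well above the baseline, the information the protected column carried is still available to the model even after the column is deleted. This toy check is in-sample only; a real audit would use held-out data and a proper classifier.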

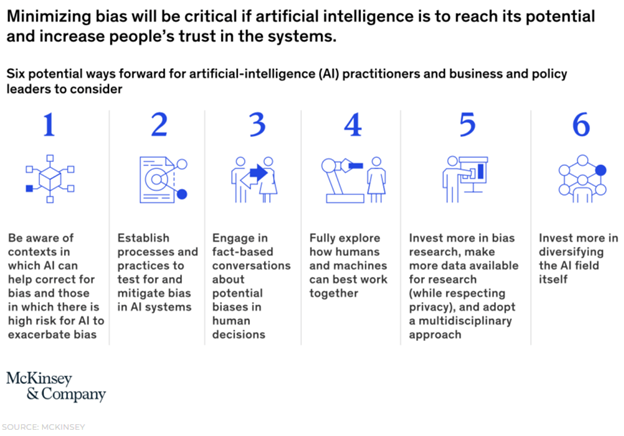

So there are no quick fixes for removing all biases, but consultants like McKinsey have published high-level recommendations highlighting best practices for minimizing AI bias:

Steps to fixing bias in AI systems:

- You should fully understand the algorithm and data to assess where the risk of unfairness is high

- You should establish a debiasing strategy that contains a portfolio of technical, operational and organizational actions:

  - Technical strategy involves tools that can help you identify potential sources of bias and reveal the traits in the data that affect the accuracy of the model.

  - Operational strategies include improving data collection processes and using internal “red teams” and third-party auditors. You can find more practices in Google AI’s research on fairness.

  - Organizational strategy includes establishing a workplace where metrics and processes are transparently presented.

- As you identify biases in training data, you should consider how human-driven processes might be improved. Model building and evaluation can highlight biases that have gone unnoticed for a long time. In the process of building AI models, companies can identify these biases and use this knowledge to understand their causes. Through training, process design and cultural changes, companies can improve the actual process to reduce bias.

- Decide in which use cases automated decision-making should be preferred and in which humans should be involved.

- Research and development are key to minimizing bias in data sets and algorithms. Eliminating bias requires a multidisciplinary strategy that involves ethicists, social scientists, and the experts who best understand the nuances of each application area. Therefore, companies should seek to include such experts in their AI projects.

- Diversity in the AI community eases the identification of biases. The people who first notice bias issues are mostly users from the affected minority community. Therefore, maintaining a diverse AI team can help you mitigate unwanted AI biases.
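As a concrete instance of the technical strategy in the steps above, one widely used check compares how often each group receives a positive outcome. The sketch below computes per-group selection rates and their ratio (lowest over highest); the decisions are hypothetical, and the 0.8 threshold is the US "four-fifths" rule of thumb for adverse impact, used here only as an example cut-off rather than a universal standard. Dedicated fairness toolkits offer many more metrics than this.

```python
def selection_rates(decisions):
    """Share of positive outcomes per group; `decisions` is a list of (group, selected) pairs."""
    totals, positives = {}, {}
    for group, selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical screening outcomes for two groups of 10 applicants each
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'men': 0.6, 'women': 0.3}
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # "four-fifths" rule of thumb, used here as an example threshold
    print("flag for review")
```

A low ratio is a signal to investigate, not proof of unfairness; equal selection rates can also hide problems such as different error rates between groups.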

Brookings – Artificial Intelligence and Bias: Four key challenges

Bias Built into Data

- Data that AI systems use as input can have built-in biases, despite the best efforts of AI programmers.

- The indirect influence of bias is present in many types of data. For instance, evaluations of creditworthiness are determined by factors including employment history and prior access to credit, two areas in which race has a major impact.

- To take another example, imagine how AI might be used to help a large company set starting salaries for new hires. One of the inputs would certainly be salary history, but given the well-documented concerns regarding the role of sexism in corporate compensation structures, that could import gender bias into the calculations.
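The salary-history example can be made concrete with a few lines of arithmetic. The sketch below uses a hypothetical rule, `starting_offer`, as a stand-in for a model whose dominant input is salary history: it offers a fixed percentage above the candidate's last salary. The candidates, salaries, and 10% uplift are invented for illustration; the point is that any relative gap already present in the inputs passes straight through to the outputs.

```python
def starting_offer(prior_salary, uplift=0.10):
    """Hypothetical rule standing in for a model driven by salary history:
    offer a fixed percentage above the candidate's last salary."""
    return prior_salary * (1 + uplift)


# Hypothetical, equally qualified candidates whose previous employers paid them
# differently (for example, because of a historical gender pay gap)
prior_salaries = {"candidate_a": 100_000, "candidate_b": 85_000}
offers = {name: starting_offer(salary) for name, salary in prior_salaries.items()}

gap_before = 1 - prior_salaries["candidate_b"] / prior_salaries["candidate_a"]
gap_after = 1 - offers["candidate_b"] / offers["candidate_a"]
print(f"pay gap before hire: {gap_before:.1%}, after: {gap_after:.1%}")
```

Nothing in the rule mentions gender, yet the 15% gap is reproduced exactly, which is the kind of built-in bias described above.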

AI-Induced Bias

- Biases can be created within AI systems and then become amplified as the algorithms evolve.

- AI algorithms are not static. They learn and change over time. Initially, an algorithm might make decisions using only a relatively simple set of calculations based on a small number of data sources.

- As the system gains experience, it can broaden the amount and variety of data it uses as input and subject those data to increasingly sophisticated processing.

- An algorithm can end up being much more complex than when it was initially deployed. Notably, these changes are not due to human intervention to modify the code, but rather to automatic modifications made by the machine to its own behavior. In some cases, this evolution can introduce bias.

- Analogous phenomena have long occurred in non-AI contexts. But with AI, things are far more opaque, as the algorithm can quickly evolve to the point where even an expert can have trouble understanding what it is actually doing.

- The power of AI to invent algorithms far more complex than humans could create is one of its greatest assets – and, when it comes to identifying and addressing the sources and consequences of algorithmically generated bias, one of its greatest challenges.
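One simple, well-known way a system can amplify bias on its own is a feedback loop in which its decisions determine what data it collects next. The toy simulation below is a swapped-in illustration of that mechanism, not the Brookings authors' example: two groups have the same true incident rate, the system always inspects the group with more recorded incidents, and only the inspected group's record grows. The function name, starting counts, and rate are hypothetical.

```python
def simulate_feedback_loop(recorded, true_rate, rounds):
    """Toy runaway feedback loop (hypothetical setup).

    Each round, the single inspector goes to whichever group has the most recorded
    incidents, and the expected number found there is added to the record. Both
    groups have the same true rate, but only the inspected group gets new data.
    """
    recorded = list(recorded)
    history = [tuple(recorded)]
    for _ in range(rounds):
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        recorded[target] += true_rate
        history.append(tuple(recorded))
    return history


history = simulate_feedback_loop([11, 10], true_rate=5, rounds=10)
print(history[0], "->", history[-1])  # (11, 10) -> (61, 10)
```

A one-incident head start becomes a 51-incident gap, even though both groups behave identically; the system's own choices generated the skewed data it learns from.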

Teaching AI Human Rules

- From the standpoint of machines, humans have some complex rules about when it is okay to consider attributes that are often associated with bias. Take gender: We would rightly deem it offensive (and unlawful) for a company to adopt an AI-generated compensation plan with one pay scale for men and a different, lower pay scale for women.

- AI algorithms trained in part using data in one context can later be migrated to a different context with different rules about the types of attributes that can be considered. In the highly complex AI systems of the future, we may not even know when these migrations occur, making it difficult to know when algorithms may have crossed legal or ethical lines.

Evaluating Cases of Suspected AI Bias

- There is no question that bias is a significant problem in AI. However, just because algorithmic bias is suspected does not mean it will prove to be present in every case.

- There will often be more information on AI-driven outcomes – e.g., whether a loan application was approved or denied; whether a person applying for a job was hired or not – than on the underlying data and algorithmic processes that led to those outcomes.

- While an accusation of AI bias should always be taken seriously, the accusation itself should not be the end of the story. Investigations of AI bias will need to be structured in a way that maximizes the ability to perform an objective analysis, free from pressures to arrive at any preordained conclusions.
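Because outcome data (approved or denied, hired or not) is often all an investigator has, one objective first step is to ask whether an observed gap between groups is larger than chance alone would plausibly produce. The sketch below implements a standard two-proportion z-test with pooled variance; the counts are hypothetical, and the test assumes independent outcomes and reasonably large samples. It cannot show why a gap exists, only whether it is statistically distinguishable from noise.

```python
import math


def two_proportion_z_test(approved_a, total_a, approved_b, total_b):
    """Two-sided z-test for a difference between two groups' approval rates.

    Uses the pooled-proportion standard error; assumes independent outcomes and
    samples large enough for the normal approximation.
    """
    rate_a = approved_a / total_a
    rate_b = approved_b / total_b
    pooled = (approved_a + approved_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_a - rate_b) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same 60% vs 45% approval gap, observed on a small and a large hypothetical sample
for counts in [(12, 20, 9, 20), (600, 1000, 450, 1000)]:
    z, p = two_proportion_z_test(*counts)
    print(f"n={counts[1]} per group: z={z:.2f}, p={p:.3g}")
```

With 20 applicants per group the gap is well within what chance could produce, while the same gap over 1,000 applicants per group is very unlikely to be chance, which illustrates why a suspicion of bias needs structured analysis before a conclusion is drawn.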

European Commission Report: Ethics Guidelines for Trustworthy AI

Foundations of Trustworthy AI

AI ethics is a sub-field of applied ethics, focusing on the ethical issues raised by the development, deployment and use of AI. Its central concern is to identify how AI can advance, or raise concerns about, the good life of individuals, whether in terms of quality of life, or the human autonomy and freedom necessary for a democratic society.

Ethical reflection on AI technology can serve multiple purposes:

- It can stimulate reflection on the need to protect individuals and groups at the most basic level.

- It can stimulate new kinds of innovations that seek to foster ethical values.

- It can improve individual flourishing and collective wellbeing by generating prosperity, value creation and wealth maximization.

As with any powerful technology, the use of AI systems in our society raises several ethical challenges, for instance relating to their impact on people and society, decision-making capabilities and safety. If we are increasingly going to use the assistance of or delegate decisions to AI systems, we need to make sure these systems are fair in their impact on people’s lives, that they are in line with values that should not be compromised and able to act accordingly, and that suitable accountability processes can ensure this.

Fundamental rights as a basis for Trustworthy AI

- Respect for human dignity.

Entails that all people are treated with respect due to them as moral subjects, rather than merely as objects to be sifted, sorted, scored, herded, conditioned, or manipulated. AI systems should hence be developed in a manner that respects, serves, and protects humans’ physical and mental integrity, personal and cultural sense of identity, and satisfaction of their essential needs.

- Freedom of the individual.

Requires mitigation of (in)direct illegitimate coercion, threats to mental autonomy and mental health, unjustified surveillance, deception, and unfair manipulation.

- Respect for democracy, justice, and the rule of law.

AI systems should serve to maintain and foster democratic processes and respect the plurality of values and life choices of individuals. AI systems must not undermine democratic processes, human deliberation, or democratic voting systems. AI systems must also embed a commitment to ensure that they do not operate in ways that undermine the foundational commitments upon which the rule of law is founded, mandatory laws and regulation, and to ensure due process and equality before the law.

- Equality, non-discrimination, and solidarity.

This goes beyond non-discrimination, which tolerates the drawing of distinctions between dissimilar situations based on objective justifications. In an AI context, equality entails that the system’s operations cannot generate unfairly biased outputs (e.g. the data used to train AI systems should be as inclusive as possible, representing different population groups).

- Citizens’ rights.

Citizens benefit from a wide array of rights, including the right to vote, the right to good administration or access to public documents, and the right to petition the administration. AI systems offer substantial potential to improve the scale and efficiency of government in the provision of public goods and services to society. At the same time, citizens’ rights could also be negatively impacted by AI systems and should be safeguarded. When the term “citizens’ rights” is used here, this is not to deny or neglect the rights of third-country nationals and irregular (or illegal) persons who also have rights under international law, and – therefore – in the area of AI systems.

Ethical Principles in the Context of AI Systems

- The principle of respect for human autonomy

Ensuring respect for the freedom and autonomy of human beings. Humans interacting with AI systems must be able to keep full and effective self-determination over themselves, and be able to partake in the democratic process. AI systems should not unjustifiably subordinate, coerce, deceive, manipulate, condition or herd humans. Instead, they should be designed to augment, complement and empower human cognitive, social and cultural skills.

- The principle of prevention of harm

This entails the protection of human dignity as well as mental and physical integrity. AI systems and the environments in which they operate must be safe and secure. They must be technically robust and it should be ensured that they are not open to malicious use. Particular attention must also be paid to situations where AI systems can cause or exacerbate adverse impacts due to asymmetries of power or information, such as between employers and employees, businesses and consumers or governments and citizens.

- The principle of fairness

Fairness has both a substantive and procedural dimension. The substantive dimension implies a commitment to: ensuring equal and just distribution of both benefits and costs, and ensuring that individuals and groups are free from unfair bias, discrimination and stigmatization. Equal opportunity in terms of access to education, goods, services, and technology should also be fostered. Moreover, the use of AI systems should never lead to people being deceived or unjustifiably impaired in their freedom of choice. The procedural dimension of fairness entails the ability to contest and seek effective redress against decisions made by AI systems and by the humans operating them. In order to do so, the entity accountable for the decision must be identifiable, and the decision-making process should be explicable.

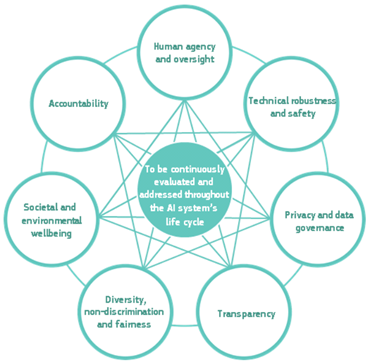

Requirements of Trustworthy AI

Different groups of stakeholders have different roles to play in ensuring that the requirements are met:

- Developers should implement and apply the requirements to design and development processes;

- Deployers should ensure that the systems they use and the products and services they offer meet the requirements;

- End-users and the broader society should be informed about these requirements and able to request that they are upheld.

Examples of requirements

Human agency and oversight

Supporting human autonomy and decision-making, as prescribed by the principle of respect for human autonomy. This requires that AI systems act as enablers of a democratic, flourishing and equitable society by supporting the user’s agency and fostering fundamental rights, and that they allow for human oversight.

AI systems can equally enable and hamper fundamental rights. In situations where such risks exist, a fundamental rights impact assessment should be undertaken. This should be done prior to the system’s development and include an evaluation of whether those risks can be reduced or justified as necessary in a democratic society in order to respect the rights and freedoms of others.

Users should be able to make informed autonomous decisions regarding AI systems. They should be given the knowledge and tools to comprehend and interact with AI systems to a satisfactory degree and, where possible, be enabled to reasonably self-assess or challenge the system. AI systems should support individuals in making better, more informed choices in accordance with their goals.

Human oversight helps ensure that an AI system does not undermine human autonomy or cause other adverse effects. Oversight may be achieved through governance mechanisms such as a human-in-the-loop (HITL), human-on-the-loop (HOTL), or human-in-command (HIC) approach. This can include the decision not to use an AI system in a particular situation, to establish levels of human discretion during the use of the system, or to ensure the ability to override a decision made by a system.
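Human-in-the-loop oversight can take many forms. One common pattern, sketched below under hypothetical assumptions, is confidence-based deferral plus override: the model decides only when its estimated probability is clearly high or low, sends the uncertain middle band to a person, and any decision can be replaced by a human. The `Decision` record, the `route` and `override` helpers, and the 0.2/0.8 thresholds are invented for illustration; this does not model the human-on-the-loop or human-in-command approaches.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    case_id: str
    outcome: Optional[str]  # "approve", "deny", or None while awaiting a human
    decided_by: str         # "model" or "human"


def route(case_id: str, approve_probability: float,
          low: float = 0.2, high: float = 0.8) -> Decision:
    """Hypothetical deferral policy: the model decides only outside the uncertain band."""
    if approve_probability >= high:
        return Decision(case_id, "approve", "model")
    if approve_probability <= low:
        return Decision(case_id, "deny", "model")
    # Uncertain band: the model abstains and a person makes the call.
    return Decision(case_id, None, "human")


def override(decision: Decision, outcome: str) -> Decision:
    """A human can replace any decision, including a confident model decision."""
    return Decision(decision.case_id, outcome, "human")


print(route("case-1", 0.93))
print(route("case-2", 0.55))
print(override(route("case-3", 0.10), "approve"))
```

Recording who made each final decision also supports the procedural side of fairness, since it keeps the accountable party identifiable when a decision is contested.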

Technical robustness and safety

Technical robustness is closely linked to the principle of prevention of harm. It requires

- Privacy and data governance

- Transparency

- Diversity, non-discrimination, and fairness

- Societal and environmental wellbeing

- Accountability