There is no wasted time studying the variety of algorithms at our disposal. After all, their relevance is determined by factors both within and outside our control. However, the growing variety of data from both communication and collaboration platforms brings a fresh series of challenges for both information governance and discovery. Vendors will continue to innovate if new data types keep appearing and/or machine learning technology becomes increasingly more accessible. Notably, the next five years will see a growth in the development and application of various deep learning methodologies, including those related to image recognition, such as Covnets (CNNs). Understanding exactly what these algorithms are and how they work is essential to any leader driving innovation from within.

Below is a list of key AI terms that everyone working with technology and data should become familiar with. Whilst this is not a complete list, it provides a solid foundation on a few key terms related to a core subfield of AI, Machine Learning.

Machine Learning:

The subfield of computer science that gives computers the ability to learn without being explicitly programmed. Evolved from the study of pattern recognition and computational learning theory in artificial intelligence, machine learning explores the study and construction of algorithms that can learn from and make predictions on data – such algorithms overcome following strictly static program instructions by making data-driven predictions or decisions, through building a model from sample inputs. Machine learning is employed in a range of computing tasks where designing and programming explicit algorithms is unfeasible; example applications include spam filtering, optical character recognition (OCR), search engines and computer vision.

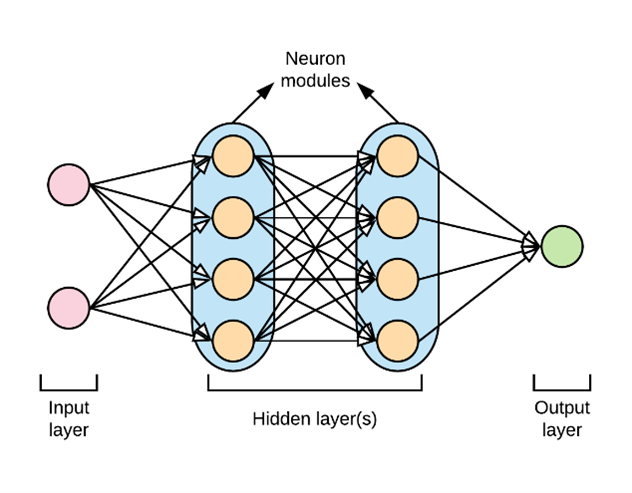

Artificial Neural Networks (ANN):

Neural Networks (also referred to as connectionist systems) are a computational approach which is based on a large collection of neural units loosely modeling the way the brain solves problems with large clusters of biological neurons connected by axons. Each neural unit relates to many others in the following layer. The links that connect neural units can be enforcing or inhibitory in their effect on the activation state of connected neural units. Each individual neural unit may have a summation function which combines the values of all its inputs together. There may be a threshold function or limiting function on each connection and on the unit itself such that it must surpass it before it can propagate to other neurons. These systems are self-learning and trained rather than explicitly programmed.

Backpropagation:

Backpropagation, an abbreviation for “backward propagation of errors”, is a common method of training ANN’s used in conjunction with an optimization method such as gradient descent. The method calculates the gradient of loss function with respect to all the weights in the network, so that the gradient is fed to the optimization method which in turn uses it to update the weights, in an attempt to minimize the loss function.

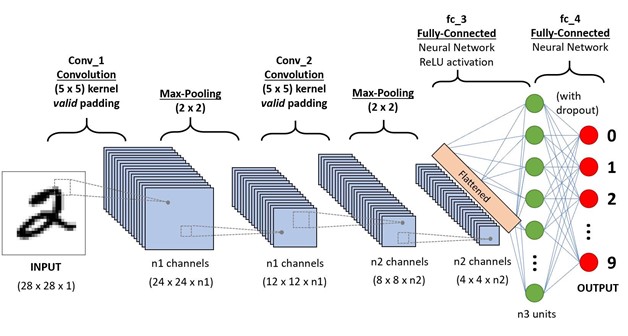

Convolutional Neural Networks:

In convolutional neural networks (CNNs), the computer visualizes an input image as an array of pixels. The better the image resolution, the better the training data. The purpose of CNN’s is to enable machines to view the world and perceive it as close to the way humans do. The applications are broad, including image & video recognition, image analysis and classification, media recognition, recommendation systems, and natural language processing (among others).

The CNN has two components: feature extraction and classification. The process starts with the convolutional layer, which extracts features from the input image. This preserves the relationship between pixels by learning features using small squares of input data. The convolution of an image with different filters can perform operations such as edge detection, blur and sharpen by applying filters. Next come the strides, or the number of pixels shifting over the input matrix. If you had a picture of a tiger, this is the part where the network would recognize its teeth, its stripes, two ears, and four legs. During classification, connected layers of neurons serve as a classifier on top of the extracted features. Each layer assigns a probability for the object on the image being a tiger.

Deep Learning:

Deep learning (also known as deep structured learning, hierarchical learning or deep machine learning) is a branch of machine learning based on a set of algorithms that attempt to model high level abstractions in data by using a deep graph with multiple processing layers, composed of multiple linear and non-linear transformations. Deep learning has been characterized as a buzzword, or rebranding or neural networks.

Generative Adversarial Networks:

GANs are a deep-learning-based generative model. More generally, GANs are a model architecture for training a generative model, and it is most common to use deep learning models in this architecture. Highlighted above, the GAN model architecture involved two sub-models: a generative model for generating new examples, and a discriminator model for classifying whether generated examples are real, from the domain, or fake, generated by the generator model. GANs are based on a game theoretic scenario in which the generator network must compete against an adversary. The generator network directly produces examples. Its adversary, the discriminator network, attempts to distinguish between samples drawn from the training data and samples drawn from the generator.

Supervised Learning:

In supervised learning, the computer is taught by example. It learns from past data and applies the learning to present data to predict future events. In this case, both input and the desired output data provide help to the prediction of future events. For accurate predictions, the input data is labeled or tagged as the right answer. It is important to remember that all supervised learning algorithms are essentially complex algorithms, categorized as either classification or regression models.

- Classification models are used for problems where the output variable can be categorized, such as “Yes” or “No”, or “Relevant” or “Not Relevant”. Classification Models are used to predict the category of the data. Real-life examples include spam detection, sentiment analysis, scorecard prediction of exams, etc.

- Regressions models are used for problems where the output variable is a real value such as a unique number, dollars, salary, weight or pressure, for example. It is most often used to predict numerical values based on previous data observations. Some of the more familiar regression algorithms include linear regression, logistic regression, polynomial regression, and ridge regression.

Practical applications of supervisions learning algorithms include text categorization, face detection, signature recognition, document review, spam detection, weather forecasting, predicting stock prices, among others.

Unsupervised Learning:

Unsupervised learning is the method that trains machines to use data that is neither classified nor labeled. It means no training data can be provided and the machine is made to learn by itself. The machine must be able to classify the data without any prior information about the data. The idea is to expose the machines to large volumes of varying data and allow it to learn from that data to provide insights that were previously unknown and to identify hidden patterns. As such, there aren’t necessarily defined outcomes from supervised learning algorithms. Rather, it determines what is different or interesting from the given dataset.

The machine needs to be programmed to learn by itself. The computer needs to understand and provide insights from both structured and unstructured data.

- Clustering is one of the most common unsupervised learning methods. The method of clustering involves organizing unlabeled data into similar groups called clusters. Thus, a cluster is a collection of similar data points into a cluster.

- Anomaly detection is the method of identifying rare items, events or observations which differ significantly from the majority of the data. We generally look for anomalies or outliers in data because they are suspicious. Anomaly detection is often utilized in bank fraud and medical error detection.

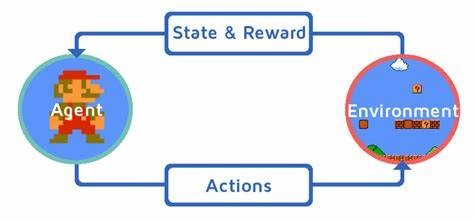

Reinforcement Learning:

Unlike other types of machine learning, reinforcement learning doesn’t require a lot of training examples. Instead, reinforcement learning models are given an environment, a set of actions they can perform, and a goal or a reward they must pursue. Reinforcement learning is a behavioral learning model where the algorithm provides data analysis feedback, directing the user to the best result.

An example of reinforced learning is the recommendation on YouTube, for example. After watching a video, the platform will show you similar titles that you might like. However, suppose you start watching the recommendation and do not finish it. In that case, the machine understands that the recommendation would not be a good one and will try another approach next time.

Reinforcement is done with rewards according to the decisions made; it is possible to learn continuously from interactions with the environment at all times. With each correct action, we will have positive rewards and penalties for incorrect decisions. In the industry, this type of learning can help optimize processes, simulations, monitoring, maintenance, and the control of autonomous systems.

This blog is a refresher on Chip Delany’s previous article, Kangaroo Court: AI Education – Machine Learning.

Learn more about ACEDS e-discovery training and certification, and subscribe to the ACEDS blog for weekly updates.