Four Essential Principles of Quantum Computation

The answer to the question “what is a quantum computer?” encompasses quantum mechanics (QM), quantum information theory (QIT) and computer science (CS). It is a device that leverages specific properties described by quantum mechanics to perform computation. We are entering a new era of computation that will catalyze discoveries in science and technology. Novel computing platforms will probe the fundamental laws of our universe and aid in solving hard problems that affect all of us. In this post I briefly outline four essential principles of quantum mechanical systems: superposition, the Born rule, entanglement, and reversible computation.

Quantum computing is part of the larger field of quantum information sciences (QIS). All three branches of QIS – computation, communication, and sensing – are advancing at rapid rates and a discovery in one area can spur progress in another. Quantum communications leverages the unusual properties of quantum systems to transmit information in a manner that no eavesdropper can read. This field is becoming increasingly critical as quantum computing drives us to a post-quantum cryptography regime. Quantum sensing is a robust field of research which uses quantum devices to move beyond classical limits in sensing magnetic and other fields. For example, there is an emerging class of sensors for detecting position, navigation, and timing (PNT) at the atomic scale. These micro-PNT devices can provide highly accurate positioning data when GPS is jammed or unavailable.

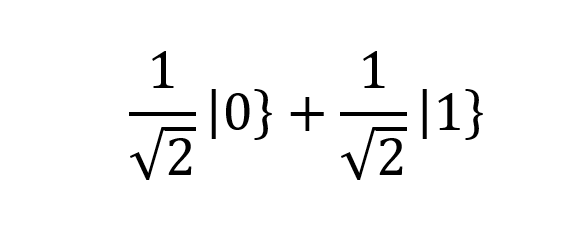

Every classical (that is, non-quantum) computer can be described by quantum mechanics, since quantum mechanics is the basis of the physical universe. However, a classical computer does not take advantage of the specific properties and states that quantum mechanics affords us in doing its calculations. According to the principles of quantum mechanics, systems are set to a definite state only once they are measured. Before a measurement, systems are in an indeterminate state; after we measure them, they are in a definite state. If we have a system that can take on one of two discrete states when measured, we can represent the two states in Dirac notation as |0⟩ and |1⟩. We can then represent a superposition of states as a linear combination of these states, such as α|0⟩ + β|1⟩, where α and β are the amplitudes of the two states.

The Superposition Principle: The linear combination of two or more state vectors is another state vector in the same Hilbert space and describes another state of the system.

Light has an intrinsic property called polarization, which we can use to illustrate a superposition of states. In almost all the light we see in everyday life – from the sun, for example – there is no preferred direction for the polarization. Polarization states can be selected by means of a polarizing filter, a thin film with an axis that only allows light with polarization parallel to that axis to pass through. With a single polarizing filter, we can select one polarization of light, e.g., vertical polarization or horizontal polarization. Together, these two states form a basis for any polarization of light. That is, any polarization state can be written as a linear combination of these states.
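To make the idea of a basis concrete, here is a minimal sketch in Python that writes a linear polarization state in the horizontal/vertical basis. The function names (`polarization_state`, `combine`) and the convention of measuring the angle from the horizontal axis are my own assumptions for illustration, and the sketch only covers linear polarization with real amplitudes.

```python
import math

def polarization_state(theta_deg):
    """Amplitudes (horizontal, vertical) of light linearly polarized at
    theta_deg degrees from the horizontal axis (an assumed convention)."""
    theta = math.radians(theta_deg)
    return (math.cos(theta), math.sin(theta))

def combine(s, t, c1, c2):
    """Linear combination c1*s + c2*t of two polarization states."""
    return (c1 * s[0] + c2 * t[0], c1 * s[1] + c2 * t[1])

H = polarization_state(0)    # horizontal basis state
V = polarization_state(90)   # vertical basis state

# Diagonal (45 degree) polarization is an equal superposition of H and V.
diag = combine(H, V, 1 / math.sqrt(2), 1 / math.sqrt(2))
print(diag)
print(polarization_state(45))  # agrees with diag up to rounding error
```

Any other angle works the same way: the two coefficients are simply the cosine and sine of the angle.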

German physicist and mathematician Max Born demonstrated in his 1926 paper that in a superposition of states, the modulus squared of the amplitude of a state is the probability of that state resulting after measurement. Furthermore, the sum of the squared moduli of the amplitudes of all possible states in the superposition is equal to 1. While an equal superposition of the two polarization states above gives a 50/50 split in probability between the two states, some other physical system might have a 75/25 split or some other probability distribution. One critical difference between classical and quantum mechanics is that amplitudes (not probabilities) can be complex numbers.
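The Born rule is easy to sketch in code. The following is a minimal illustration, not a simulator: the helper names `born_probabilities` and `measure` are hypothetical, and the two example states are chosen only to reproduce the 50/50 and 75/25 splits mentioned above, the second one with a complex amplitude.

```python
import math
import random

def born_probabilities(amplitudes):
    """Probability of each outcome is the modulus squared of its amplitude."""
    probs = [abs(a) ** 2 for a in amplitudes]
    total = sum(probs)
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError(f"state is not normalized: probabilities sum to {total}")
    return probs

def measure(amplitudes, rng=random):
    """Sample one outcome (an index into the state) according to the Born rule."""
    probs = born_probabilities(amplitudes)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

equal = [1 / math.sqrt(2), 1 / math.sqrt(2)]   # 50/50 split
skewed = [math.sqrt(3) / 2, 0.5j]              # 75/25 split, complex amplitude

print(born_probabilities(equal))    # approximately [0.5, 0.5]
print(born_probabilities(skewed))   # approximately [0.75, 0.25]
print(measure(skewed))              # 0 about three times out of four
```

Note that the phase of the complex amplitude `0.5j` does not change the probability of that outcome on its own; phases matter once amplitudes are added together before measurement.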

As if quantum superposition were not odd enough, quantum mechanics describes a specific kind of superposition which stretches our imagination even further: entanglement. In 1935, when Einstein worked with Podolsky and Rosen to publish their paper on quantum entanglement (now known as EPR), their aim was to attack the edifice of quantum mechanics. Even though Einstein earned the Nobel Prize for his 1905 work on the quantum nature of the photoelectric effect, he nevertheless railed against the implications of QM into his later years.

EPR showed that if you take two particles that are entangled with each other and then measure one of them, this automatically triggers a correlated state of the second, even if the two are at a great distance from each other. This was the seemingly illogical result that EPR hoped to use to show that QM itself must have a flaw. Ironically, we now consider entanglement to be a cornerstone of quantum mechanics. Entanglement occurs when we have a superposition of states that is not separable.

Entanglement: Two systems are in a special case of quantum mechanical superposition called entanglement if the measurement of one system is correlated with the state of the other system in a way that is stronger than correlations in the classical world. In other words, the states of the two systems are not separable.
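For the simplest case, two qubits in a pure state, separability can be checked directly. A state with amplitudes (a00, a01, a10, a11) over |00⟩, |01⟩, |10⟩, |11⟩ is a product of two single-qubit states exactly when a00·a11 − a01·a10 = 0. The sketch below uses that test; the function names are hypothetical, and the check does not carry over unchanged to mixed states or to more than two qubits.

```python
import math

def tensor(q1, q2):
    """Product state of two single-qubit states, in the order |00>, |01>, |10>, |11>."""
    return (q1[0] * q2[0], q1[0] * q2[1], q1[1] * q2[0], q1[1] * q2[1])

def is_separable(state, tol=1e-12):
    """True if a pure two-qubit state factors into two single-qubit states."""
    a00, a01, a10, a11 = state
    return abs(a00 * a11 - a01 * a10) < tol

s = 1 / math.sqrt(2)
product_state = tensor((s, s), (1, 0))   # (|0> + |1>)/sqrt(2) on qubit 1, |0> on qubit 2
bell_state = (s, 0, 0, s)                # (|00> + |11>)/sqrt(2)

print(is_separable(product_state))  # True: a superposition, but not entangled
print(is_separable(bell_state))     # False: entangled
```

The first example is a reminder that superposition alone is not entanglement: the first qubit is in a superposition, yet the pair still factors cleanly.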

So far we have covered two core ideas of quantum mechanics – superposition and entanglement. There is another concept which is not covered as often – the physicality of information. German-American physicist Rolf Landauer established a new line of inquiry when he asked the following question: “The search for faster and more compact computing circuits leads directly to the question: what are the ultimate physical limitations on the progress in this direction? …we can show, or at the very least strongly suggest, that information processing is inevitably accompanied by a certain minimum amount of heat generation.” In other words, is there a lower bound to the energy dissipated in the process of a basic unit of computation?

Reversibility of Quantum Computation: All operators used in quantum computation other than for measurement must be reversible.

Landauer defined logical irreversibility as a condition in which “the output of a device does not uniquely define the inputs.” He then claimed that “logical irreversibility …in turn implies physical irreversibility, and the latter is accompanied by dissipative effects.” This follows from the second law of thermodynamics, which states that the total entropy of an isolated system cannot decrease and, more specifically, must increase in an irreversible process. In classical computing we make use of irreversible computations. In quantum computing, we limit ourselves to reversible logical operations. This requirement derives from the nature of irreversible operations: if we perform an irreversible operation, we lose information, which in effect amounts to a measurement. Our computation cycle is then done and we can no longer continue with our program. Instead, by limiting all gates to reversible operators, we may continue to apply operators to our set of qubits as long as we can maintain coherence in the system. When we say reversible, we are assuming a theoretical noiseless quantum computer.
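Landauer's definition can be tested mechanically: a gate is logically reversible when distinct inputs always give distinct outputs. The sketch below checks this for a classical AND gate and for CNOT acting on classical bit values, and then checks the quantum counterpart, unitarity, for the Hadamard matrix. All names here are illustrative assumptions, and the matrices are plain nested lists rather than any library type.

```python
import math
from itertools import product

def is_reversible(gate, n_bits):
    """True if the outputs of a classical gate uniquely define its inputs."""
    inputs = list(product((0, 1), repeat=n_bits))
    outputs = [gate(*bits) for bits in inputs]
    return len(set(outputs)) == len(inputs)

def and_gate(a, b):
    return (a & b,)              # two bits in, one bit out: information is lost

def cnot(control, target):
    return (control, target ^ control)

def is_unitary(m, tol=1e-12):
    """True if (conjugate transpose of m) times m is the identity."""
    n = len(m)
    for i in range(n):
        for j in range(n):
            entry = sum(m[k][i].conjugate() * m[k][j] for k in range(n))
            if abs(entry - (1.0 if i == j else 0.0)) > tol:
                return False
    return True

s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]
PROJECTOR = [[1.0, 0.0], [0.0, 0.0]]   # projects onto |0>, as a measurement outcome would

print(is_reversible(and_gate, 2))  # False
print(is_reversible(cnot, 2))      # True
print(is_unitary(HADAMARD))        # True
print(is_unitary(PROJECTOR))       # False
```

The projector is included to show the exception in the principle above: the projection associated with a measurement outcome is not unitary, so it cannot be undone.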

Further

In 1996, David DiVincenzo and several other researchers in the field formalized what constitutes a quantum computer and a quantum computation. DiVincenzo outlined the key criteria of a quantum computer in this manner:

- A scalable physical system with qubits that are distinct from one another and the ability to count exactly how many qubits there are in the system. The system can be accurately represented by a Hilbert space.

- The ability to initialize the state of any qubit to a definite state in the computational basis. For example, setting all qubits to state |0⟩ if the computational basis vectors are |0⟩ and |1⟩.

- The system’s qubits must be able to hold their state. This means that the system must be isolated from the outside world, otherwise the qubits will decohere. Some decay of state is allowed. In practice, the system’s qubits must hold their state long enough to apply the next operator with assurance that the qubits have not changed state due to outside influences between operations.

- The system must be able to apply a sequence of unitary operators to the qubit states. The system must also be able to apply a unitary operator to two qubits at once. This entails entanglement between those qubits. As DiVincenzo stated in his paper, “…entanglement between different parts of a quantum computer is good; entanglement between the quantum computer and its environment is bad, since it corresponds to decoherence.”

- The system is capable of making “strong” measurements of each qubit. By strong measurement, DiVincenzo means that the measurement says, “which orthogonal eigenstate of some particular Hermitian operator the quantum state belongs to, while at the same time projecting the wavefunction of the system irreversibly into the corresponding eigenfunction.” This means that the measuring technique in the system actually does measure the state of the qubit for the property being measured and leaves the qubit in that state. DiVincenzo wants to exclude systems that rely on weak measurement, in other words, measuring techniques that do not couple with the qubit strongly enough to leave it in the newly measured state. At the time he wrote the paper, many systems did not have sufficient coupling to guarantee projection into the new state.
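Several of the criteria above (initialization, single- and two-qubit unitary operators, and strong measurement) can be traced through a tiny two-qubit example. This is a hedged sketch of an idealized, noiseless system written with plain Python lists. The names `apply_matrix` and `measure_first_qubit` are hypothetical, and the basis ordering |00⟩, |01⟩, |10⟩, |11⟩ with the first qubit as the left bit is an assumed convention.

```python
import math
import random

def apply_matrix(matrix, state):
    """Apply an operator (nested list) to a state vector (list of amplitudes)."""
    return [sum(row[c] * state[c] for c in range(len(state))) for row in matrix]

s = 1 / math.sqrt(2)
# Hadamard on the first qubit, identity on the second.
H_ON_FIRST = [
    [s, 0, s, 0],
    [0, s, 0, s],
    [s, 0, -s, 0],
    [0, s, 0, -s],
]
# Two-qubit unitary: flip the second qubit when the first qubit is 1.
CNOT = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

def measure_first_qubit(state, rng=random):
    """Projective measurement of the first qubit: returns the outcome and
    the renormalized state the system is left in."""
    p1 = abs(state[2]) ** 2 + abs(state[3]) ** 2
    outcome = 1 if rng.random() < p1 else 0
    kept = (2, 3) if outcome == 1 else (0, 1)
    norm = math.sqrt(sum(abs(state[i]) ** 2 for i in kept))
    post = [state[i] / norm if i in kept else 0.0 for i in range(4)]
    return outcome, post

state = [1.0, 0.0, 0.0, 0.0]              # initialize both qubits to |0>
state = apply_matrix(H_ON_FIRST, state)   # (|00> + |10>)/sqrt(2)
state = apply_matrix(CNOT, state)         # (|00> + |11>)/sqrt(2), an entangled state
outcome, post = measure_first_qubit(state)
print(outcome, post)
```

After the measurement the state is either |00⟩ or |11⟩, so reading the first qubit also fixes the second, which is the correlation described in the entanglement section.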
